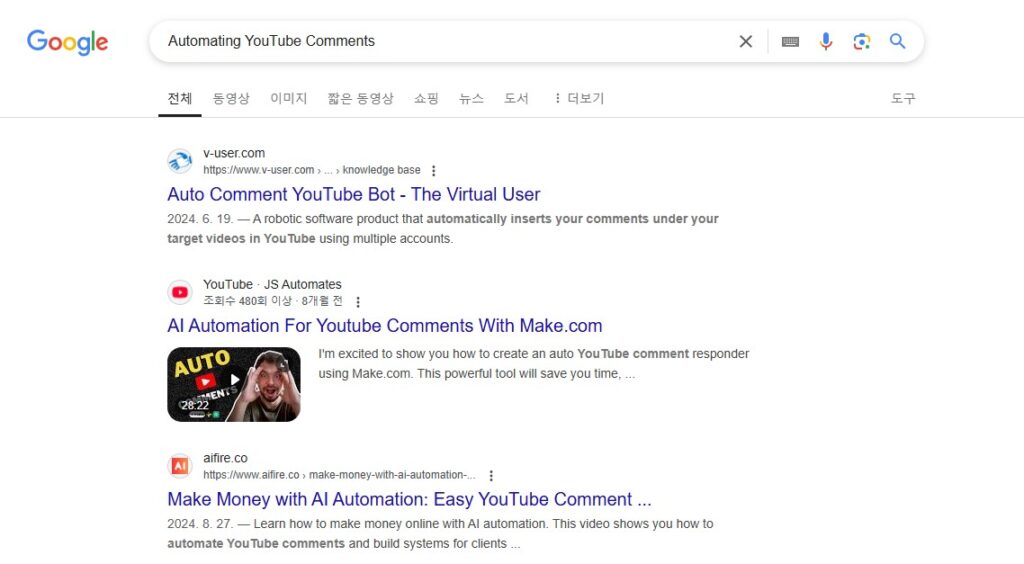

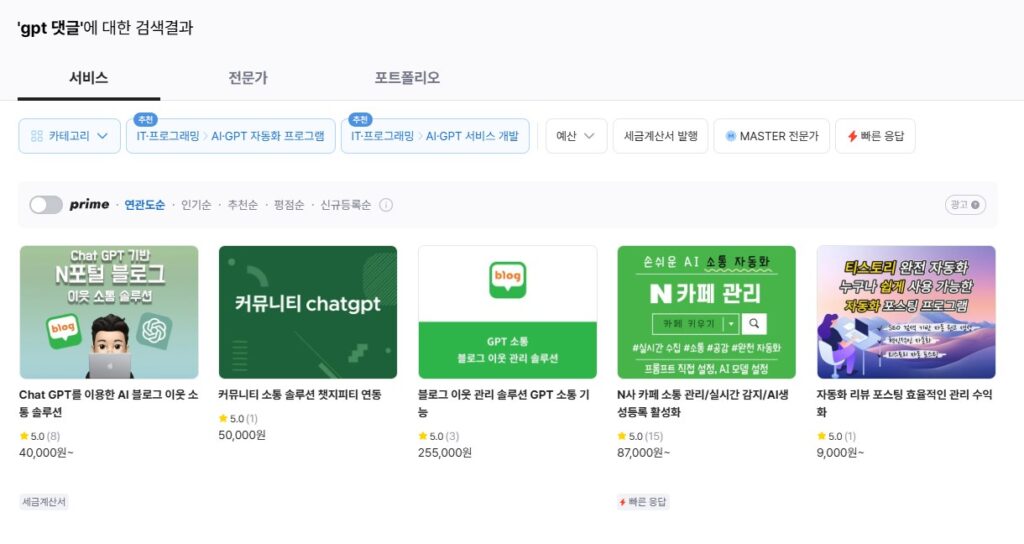

The misuse of comment bots to manipulate public opinion is emerging as a social issue. Comment bots are automated programs, often powered by artificial intelligence, that generate large numbers of comments or spread specific opinions at scale. Concerns are growing that, when used maliciously, they can distort public opinion or create misleading narratives about particular topics.

During the Texas House Speaker election in the United States, automated bots were found generating large volumes of comments that criticized one candidate and supported the opposing candidate. Data analysis revealed that all of these comments had been systematically generated by automated accounts. The incident is regarded as a representative example of how social media can be exploited to manipulate public opinion.

In another case, Russia was found to be running a campaign in the comment sections of various US and UK media outlets to spread pro-Trump conspiracy theories and pro-Kremlin narratives. Researchers identified 194 suspicious accounts that were disseminating consistent messages with the aim of manipulating public opinion. The case again highlights how comment bots can be used for political purposes.

In Scotland, automated bots attempted to manipulate public input on major issues during a government consultation. Analysts identified patterns in which dozens of responses were submitted within a short period from the same IP address, illustrating how such activity can distort the debate around particular issues.
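The same-IP burst pattern described in the Scottish case can be checked automatically. The article does not say how analysts actually found it, so the following is only a minimal sketch under stated assumptions: the function name `flag_burst_ips`, the 10-minute window, and the threshold of 20 submissions are hypothetical choices for illustration. It keeps a sliding time window of recent submissions per IP address and flags any address whose count within that window reaches the threshold.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def flag_burst_ips(submissions, window=timedelta(minutes=10), threshold=20):
    """Hypothetical helper: return the IPs that submitted at least `threshold`
    responses within any sliding window of length `window`.

    `submissions` is an iterable of (ip, timestamp) pairs. It is sorted by
    timestamp here, so the input order does not matter.
    """
    recent = defaultdict(deque)  # ip -> timestamps inside the current window
    flagged = set()
    for ip, ts in sorted(submissions, key=lambda s: s[1]):
        q = recent[ip]
        q.append(ts)
        # Drop this IP's timestamps that fall outside the window ending at ts.
        while ts - q[0] > window:
            q.popleft()
        if len(q) >= threshold:
            flagged.add(ip)
    return flagged


# Usage with made-up data (documentation-range IP addresses).
base = datetime(2024, 1, 1, 12, 0)
# One IP submits 30 responses, one every 10 seconds (under 5 minutes in total).
burst = [("203.0.113.7", base + timedelta(seconds=10 * i)) for i in range(30)]
# Ten ordinary respondents, each submitting once, an hour apart.
normal = [(f"198.51.100.{i}", base + timedelta(hours=i)) for i in range(10)]
print(flag_burst_ips(burst + normal))  # {'203.0.113.7'}
```

A flag like this is best treated as a signal for review rather than proof of automation: many legitimate users can share one public IP address (offices, universities, mobile carriers), so real systems would combine it with other evidence.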

In response, major countries are establishing legal frameworks and regulatory measures to address public opinion manipulation via comment bots. The United States is acting through the Department of Justice and the State Department's Global Engagement Center (GEC) to counter the spread of foreign disinformation. The European Union (EU) has implemented the Digital Services Act (DSA), which strengthens rules against the spread of illegal content and misinformation. Singapore has enacted the Protection from Online Falsehoods and Manipulation Act (POFMA), which gives authorities the legal power to order the correction or removal of false information.

Experts warn that public opinion manipulation through comment bots is likely to worsen. Of particular concern are advances in AI, which allow bots to produce comments that fit the surrounding context and read as natural sentences, making them increasingly difficult to detect.

Platform operators and governments therefore need to strengthen technological detection systems to prevent public opinion manipulation by comment bots. Users, for their part, need stronger media literacy so they can critically evaluate the sources of information they encounter online. As comment-bot manipulation emerges as a serious threat to social trust, stronger countermeasures will continue to be needed.