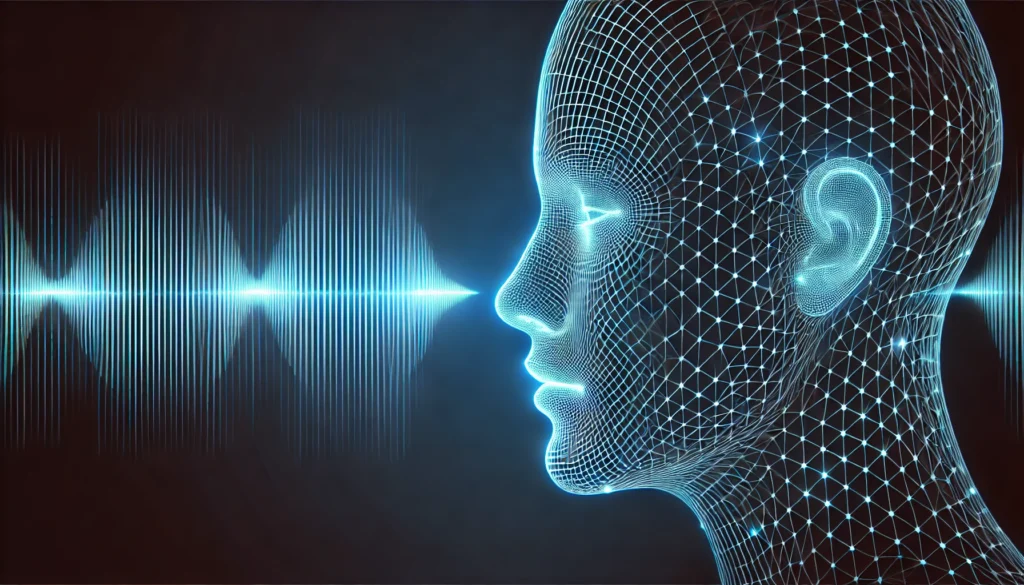

With recent advances in artificial intelligence (AI), voice cloning has become a reality, raising concerns about its misuse in voice phishing crimes. AI voice cloning can closely mimic a specific person's voice from just a few seconds of recorded audio, even replicating emotional expression. Although the technology was originally developed for beneficial applications such as voice assistants and audiobook narration, its use in financial fraud and identity theft has been increasing in recent years.

Voice phishing has long been a serious social problem. As AI voice cloning becomes increasingly commercialized, however, attacks are expected to grow more sophisticated and the resulting damage to grow in scale. In the past, scammers phoned victims directly and deceived them through social engineering alone. Now, AI can replicate the voices of a victim's family members or friends, making scams far more convincing. In other words, the forgery of a biometric trait, a familiar voice, has been layered on top of traditional social engineering.

According to a report by the New York Post last October, an elderly man in California, USA, was scammed out of $25,000 after being deceived by an AI clone of his son's voice. The scammer fabricated an emergency, claiming that the son had been in a car accident and needed bail money, and the victim transferred the funds.

Voice phishing with AI voice cloning is not only a financial fraud issue but also a significant threat to corporate security. In 2019, the CEO of a UK energy company was tricked into transferring €220,000 by an AI-generated voice that mimicked the chief executive of the firm's parent company. Because the cloned voice made the request sound like a legitimate instruction from the real executive, the company suffered a direct financial loss. Beyond financial fraud, such attacks also carry a high risk of corporate espionage and the leakage of technological secrets.

To mitigate the risks of crimes that combine AI voice cloning with voice phishing, proactive measures are needed at both the individual and corporate levels. Individuals should agree on secret code words with close family and friends for use in emergencies, and should always verify unexpected requests for money by contacting the person directly. It is also important to avoid oversharing voice or video content on social media, since the audio samples needed to clone a voice can easily be extracted from publicly available videos and recordings.

Companies and financial institutions should back voice authentication with additional, independent verification layers and strengthen real-time monitoring of suspicious financial transactions. Businesses should also run phishing training that uses AI-generated voices, so that employees learn to recognize and respond to such attacks.
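The layered verification described above can be illustrated as a simple approval rule for payment requests. What follows is a minimal, hypothetical sketch in Python: the `TransferRequest` fields, the `HIGH_VALUE_THRESHOLD` value, and the `risk_flags` and `decide` functions are illustrative assumptions, not a standard or any particular institution's system. The idea it encodes is that a recognizable voice alone never authorizes a transfer; a request showing typical warning signs is held until it is confirmed through a separate channel (for example, by calling back a number already on file) and, above a threshold, approved by a second person.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical threshold; a real institution would set its own limits.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    """A payment request as seen by a finance team (illustrative fields only)."""
    requester: str
    amount: float
    channel: str              # how the request arrived, e.g. "phone" or "in_person"
    urgent: bool              # caller claims an emergency or tight deadline
    new_beneficiary: bool     # destination account has not been paid before
    callback_confirmed: bool  # confirmed by calling back a number already on file
    second_approver: bool     # independently approved by another employee


def risk_flags(req: TransferRequest) -> List[str]:
    """Return the voice-phishing warning signs present in a request."""
    flags = []
    if req.channel == "phone":
        flags.append("voice-only request")
    if req.urgent:
        flags.append("urgency pressure")
    if req.new_beneficiary:
        flags.append("new beneficiary")
    if req.amount >= HIGH_VALUE_THRESHOLD:
        flags.append("high value")
    return flags


def decide(req: TransferRequest) -> str:
    """Approve or hold a request; a familiar voice is never proof of identity."""
    if not risk_flags(req):
        return "approve"
    # Any flagged request must be confirmed through a separate channel.
    if not req.callback_confirmed:
        return "hold"
    # Large flagged transfers also need a second person to sign off.
    if req.amount >= HIGH_VALUE_THRESHOLD and not req.second_approver:
        return "hold"
    return "approve"
```

Under these assumptions, an urgent phone request for a large payment to a new account, with no callback, is held: `decide(TransferRequest("ceo", 220_000, "phone", True, True, False, False))` returns `"hold"`. In practice, such rules would draw on transaction-monitoring data rather than hand-set flags.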

AI voice cloning offers significant benefits for everyday life, but it also enables new and more powerful forms of cybercrime. Public awareness and preventive measures are therefore more important than ever. As AI technology continues to develop rapidly, the threats associated with it grow as well, so individuals and businesses alike must remain vigilant and actively implement strategies to counter these emerging risks.